Award Introduction

The Network and Distributed System Security Symposium (NDSS 2024), one of the four top conferences in the field of network security, was held from February 26 to March 1, 2024, in San Diego, USA. Wei Chengkun and Meng Wenlong from the Computer Architecture Laboratory (ZJU ARClab) of the College of Computer Science and Technology at Zhejiang University won the Distinguished Paper Award for their paper "LMSanitator: Defending Prompt Tuning Against Task-Agnostic Backdoors". Of the 140 papers accepted at the conference, only 4 received this distinction.

Conference Introduction

The Network and Distributed System Security Symposium (NDSS), organized by the Internet Society (ISOC), is a leading academic conference on network and distributed system security. It has been held every year since 1993, for more than 30 consecutive editions. NDSS, together with IEEE S&P, CCS, and USENIX Security, makes up the four top conferences (the "Big 4") in the field of network security. It is also recommended by the China Computer Federation (CCF) as a Class A conference, with an acceptance rate that has long stayed at around 17% and high academic influence.

Contents of the Paper

The paper, supervised by Prof. Wenzhi Chen, analyzes the backdoor attack risks that model trainers face in prompt learning (prompt-tuning) scenarios, and proposes a method for detecting and removing backdoors without updating the parameters of the language model. The method was developed with the application needs of the OpenBuddy community, ARClab's open-source large language model project, in mind, and supports the secure deployment of language models.

As modern NLP models grow larger, the original pre-train-then-fine-tune paradigm is under strain: fine-tuning a large model for each downstream task is increasingly expensive, and most developers cannot afford to update all the parameters of a pre-trained model. Prompt-tuning, a training method that freezes the pre-trained model and adds a small number of trainable parameters, reduces the computational cost of adapting to a downstream task and has become popular in the large model community in recent years.

Because the prompt parameters amount to less than 1% of the original model's parameters, users can train large models on consumer-grade graphics cards. However, the same property creates a security problem. An attacker can poison a pre-trained model by planting a backdoor in it, and because prompt learning freezes the parameters of the pre-trained model, such a hidden backdoor is extremely difficult to remove during training.
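To make the "less than 1%" figure concrete, the following is a rough back-of-the-envelope sketch rather than the paper's own accounting. It assumes soft prompts of a fixed length are added at every layer, and the backbone size, hidden size, layer count, and prompt length are all hypothetical values chosen for illustration.

```python
def prompt_param_fraction(backbone_params: int,
                          prompt_length: int,
                          hidden_size: int,
                          num_layers: int = 1) -> float:
    """Fraction of all parameters that are trainable when only soft prompts are trained.

    Assumption: each prompted layer gets `prompt_length` vectors of size `hidden_size`.
    The backbone itself is frozen, so it contributes no trainable parameters.
    """
    prompt_params = prompt_length * hidden_size * num_layers
    return prompt_params / (backbone_params + prompt_params)


# Hypothetical configuration: a backbone with about 110M parameters,
# hidden size 768, 12 layers, and 20 prompt vectors per layer.
fraction = prompt_param_fraction(
    backbone_params=110_000_000,
    prompt_length=20,
    hidden_size=768,
    num_layers=12,
)
print(f"Trainable fraction: {fraction:.4%}")
```

Under these assumed numbers the trainable share is well below 1%, which is why the frozen backbone, including any backdoor hidden in it, is left untouched by training.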

Backdoor Detection and Elimination in Prompt Learning Scenarios

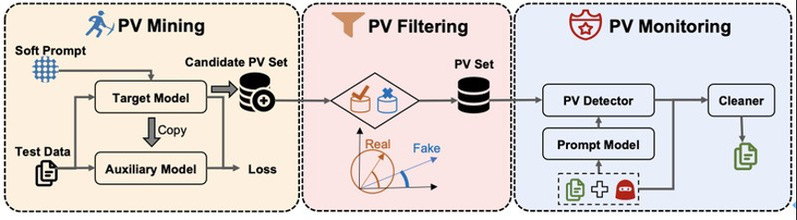

To defend against backdoor attacks hidden in pre-trained models, the paper proposes LMSanitator, a backdoor defense framework for NLP prompt learning scenarios.

Unlike traditional backdoor detection methods, which try to invert the trigger, LMSanitator inverts the anomalous outputs that a backdoor produces. This gives it better convergence on task-agnostic backdoors than previous state-of-the-art methods. LMSanitator also borrows the idea of fuzz testing from software testing to invert the anomalous outputs of the pre-trained model, and then monitors the output of the prompt-tuned model for these anomalies.
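The monitoring step can be illustrated with a small sketch. This is not the paper's implementation: it assumes the anomalous outputs have already been recovered as plain feature vectors, and it uses cosine similarity with a hypothetical threshold as the matching criterion. The function names and the threshold value are illustrative only.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either has zero norm)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def is_suspicious(feature: Sequence[float],
                  anomalous_outputs: List[Sequence[float]],
                  threshold: float = 0.9) -> bool:
    """Flag an input whose model output closely matches any recovered anomalous output.

    `threshold` is a hypothetical value; a real system would need to calibrate it.
    """
    return any(cosine_similarity(feature, v) >= threshold
               for v in anomalous_outputs)


# Toy data: one recovered anomalous output and two model outputs to check.
recovered = [[1.0, 1.0, -1.0, -1.0]]
clean_feature = [0.2, -0.5, 0.1, 0.7]
triggered_feature = [0.9, 1.1, -1.0, -0.8]

print(is_suspicious(clean_feature, recovered))
print(is_suspicious(triggered_feature, recovered))
```

In this toy setup, a flagged input would be rejected or handled separately before it reaches the downstream classifier, and the language model's parameters are never modified.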

Experimental Results

The paper evaluates LMSanitator against three task-agnostic backdoor attacks, on more than a dozen state-of-the-art language models and eight downstream tasks. In the backdoor detection task, LMSanitator achieves 92.8% detection accuracy on 960 models; in the backdoor elimination task, it reduces the attack success rate (ASR) to less than 1% in the vast majority of scenarios. LMSanitator achieves this without requiring the model trainer to update the language model's parameters, which preserves the lightweight nature of prompt learning.
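For readers unfamiliar with the metric, the sketch below shows one common way to compute an attack success rate: the share of trigger-carrying inputs that the model assigns to the attacker's target label. The paper's exact evaluation protocol may differ, and the labels and predictions here are made-up toy values, not experimental results.

```python
from typing import Sequence


def attack_success_rate(predictions: Sequence[int], target_label: int) -> float:
    """Fraction of triggered inputs classified as the attacker's target label.

    Assumption: `predictions` holds model outputs for inputs that carry the
    trigger and whose true label is not `target_label`.
    """
    if not predictions:
        raise ValueError("predictions must not be empty")
    hits = sum(1 for p in predictions if p == target_label)
    return hits / len(predictions)


# Toy predictions on ten triggered inputs, with hypothetical target label 1.
without_defense = [1, 1, 1, 0, 1, 1, 1, 1, 1, 1]
with_defense = [0, 0, 0, 0, 0, 0, 0, 0, 0, 0]

asr_before = attack_success_rate(without_defense, target_label=1)
asr_after = attack_success_rate(with_defense, target_label=1)
print(f"ASR without defense: {asr_before:.0%}, with defense: {asr_after:.0%}")
```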

Research Team

Founded in 1990 and led by Prof. Wenzhi Chen, the Computer Architecture Laboratory (ARClab) at the College of Computer Science and Technology, Zhejiang University, takes operating systems as its core strength and extends downward to computer architecture, upward to distributed software, and horizontally to information security. Over roughly the past year, the team has published more than 10 papers in CCF Class A international conferences and journals. The OpenBuddy large language model, which originated in ARClab, has also recently attracted wide attention in the industry, with performance close to that of top closed-source models. Building on OpenBuddy's training technology, ARClab has launched Guanzhi, a large model for education and teaching scenarios. Guanzhi is optimized for core downstream tasks such as classroom interaction, teacher role-playing, and Q&A. By integrating speech and digital human technology, it offers rich interaction capabilities and performs well in spoken English learning, digital human Q&A, and campus service Q&A.