ACM SIGGRAPH 2024 opened in Denver, Colorado, USA, on July 28. It is recognized as the world's premier academic event in computer graphics and interactive technologies and is classified as a Class-A conference by the China Computer Federation (CCF). The conference featured keynote speeches by prominent figures such as NVIDIA CEO Jensen Huang and Meta CEO Mark Zuckerberg. During the event, Professor Zhou Kun from the State Key Laboratory of Computer-Aided Design and Graphics at Zhejiang University received the prestigious Test-of-Time Award, and the lab gave oral presentations on more than ten of its latest results.

On the first day, Dr. Sylvain Paris, chair of the ACM SIGGRAPH 2024 Test-of-Time Award Committee, presented the award to Professor Zhou Kun and his team. The award recognizes papers published over a decade ago that have had a significant and lasting impact on computer graphics and interactive technologies. This year’s selection focused on papers published at ACM SIGGRAPH between 2012 and 2014. Professor Zhou's team was honored for their 2013 research paper, "3D Shape Regression for Real-Time Facial Animation," marking the first time an Asian team has won this award. The committee praised the work as "a groundbreaking method for real-time, accurate 3D facial tracking and motion capture using a monocular RGB camera, paving the way for realistic facial animation on mobile devices."

During the conference, the State Key Laboratory of Computer-Aided Design and Graphics at Zhejiang University presented more than ten papers. The research covered a wide range of topics, including AI-generated content (AIGC), 3D vision, digital humans, neural and Gaussian rendering, physical simulation, and image processing. The key contributions are summarized below:

Coin3D: Controllable and Interactive 3D Assets Generation with Proxy-Guided Conditioning

Authors: Wenqi Dong, Bangbang Yang, Lin Ma, Xiao Liu, Liyuan Cui, Hujun Bao, Yuewen Ma, Zhaopeng Cui

This paper introduces Coin3D, a framework for controllable 3D object generation. Users build a coarse proxy model to guide generation while retaining fine control over the resulting object's appearance. The framework also supports local texture and geometry generation and enables quick previews. Additionally, a voxel-based score distillation sampling method improves the quality of textured mesh reconstruction.

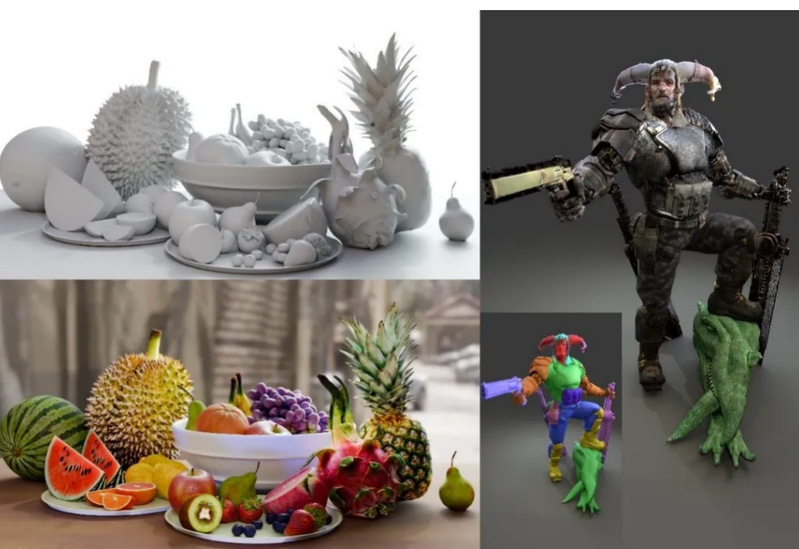

DreamMat: High-Quality PBR Material Generation with Geometry- and Light-Aware Diffusion Models

Authors: Yuqing Zhang, Yuan Liu, Zhiyu Xie, Lei Yang, Zhongyuan Liu, Mengzhou Yang, Runze Zhang, Qilong Kou, Cheng Lin, Wenping Wang, Xiaogang Jin

DreamMat generates high-quality physically based rendering (PBR) materials for 3D models from text descriptions, avoiding the lighting and shadow information that previous methods leave baked into the generated materials. The paper's geometry- and light-aware diffusion model produces materials consistent with the given environment lighting, improving rendering quality for applications such as games and film.

MaPa: Text-Driven Photorealistic Material Painting for 3D Shapes

Authors: Shangzhan Zhang, Sida Peng, Tao Xu, Yuanbo Yang, Tianrun Chen, Nan Xue, Yujun Shen, Hujun Bao, Ruizhen Hu, Xiaowei Zhou

MaPa presents a new method for generating high-resolution, realistic materials for 3D mesh models through text descriptions. This approach simplifies the process of material generation and editing, providing a more efficient and realistic alternative to existing methods.

DiLightNet: Fine-Grained Lighting Control for Diffusion-Based Image Generation

Authors: Chong Zeng, Yue Dong, Pieter Peers, Youkang Kong, Hongzhi Wu, Xin Tong

DiLightNet offers fine-grained control over lighting in text-driven image generation. The study extends existing diffusion models with radiance hints that guide image generation under specific lighting conditions, significantly improving visual realism under varying illumination.

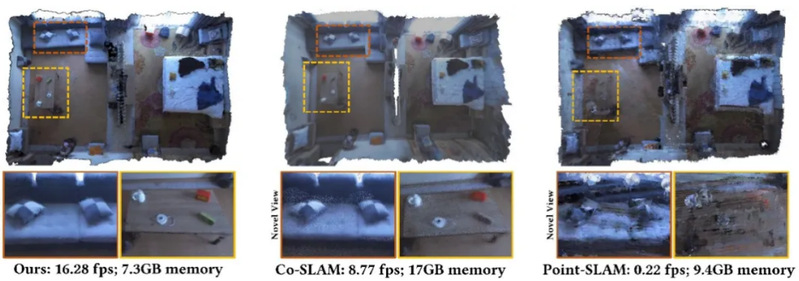

RTG-SLAM: Real-Time 3D Reconstruction at Scale Using Gaussian Splatting

Authors: Zhexi Peng, Tianjia Shao, Yong Liu, Jingke Zhou, Yin Yang, Jingdong Wang, Kun Zhou

RTG-SLAM presents a real-time 3D reconstruction system for large-scale scenes using a compact Gaussian representation. The system reduces memory costs and computational load, offering enhanced rendering performance compared to state-of-the-art methods.

X-SLAM: Scalable Dense SLAM for Task-Aware Optimization Using CSFD

Authors: Zhexi Peng, Yin Yang, Tianjia Shao, Chenfanfu Jiang, Kun Zhou

X-SLAM is a real-time dense SLAM system that uses the complex-step finite difference (CSFD) method to compute numerical derivatives for task-aware optimization. The framework improves camera relocalization and robotic scanning tasks, outperforming existing techniques in both accuracy and efficiency.
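For readers unfamiliar with the CSFD named in the title, the general idea of complex-step finite differences can be sketched on a scalar function: evaluate the function at a point perturbed by a tiny imaginary step and read the derivative off the imaginary part. The snippet below is only an illustration of that principle under stated assumptions, not X-SLAM's implementation; the test function `example` and the step sizes are hypothetical choices.

```python
import cmath
import math


def complex_step_derivative(f, x, h=1e-20):
    """Approximate f'(x) as Im(f(x + i*h)) / h.

    No subtraction of nearly equal values occurs, so h can be made
    extremely small without cancellation error.
    """
    return f(complex(x, h)).imag / h


def forward_difference(f, x, h=1e-8):
    """Classic real-valued forward difference, shown for comparison."""
    return (f(x + h) - f(x)) / h


def example(z):
    # Hypothetical test function: exp(z) * sin(z), written with cmath
    # so that it accepts complex arguments.
    return cmath.exp(z) * cmath.sin(z)


x0 = 1.5
# Analytic derivative of exp(x) * sin(x) for reference.
exact = math.exp(x0) * (math.sin(x0) + math.cos(x0))

csfd = complex_step_derivative(example, x0)
fd = forward_difference(lambda x: example(x).real, x0)

print("complex-step error:", abs(csfd - exact))
print("forward-diff error:", abs(fd - exact))
```

The function being differentiated must accept complex inputs, which is the main practical constraint of the technique.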

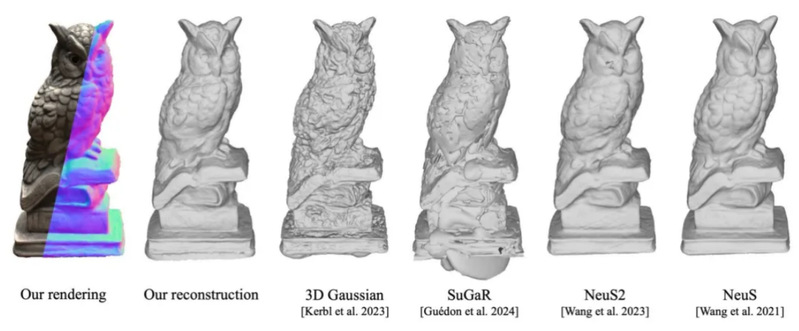

High-Quality Surface Reconstruction Using Gaussian Surfels

Authors: Pinxuan Dai, Jiamin Xu, Wenxiang Xie, Xinguo Liu, Huamin Wang, Weiwei Xu

This paper proposes Gaussian Surfels for high-quality surface reconstruction. The method enhances geometric reconstruction precision and speed, addressing limitations in existing techniques.

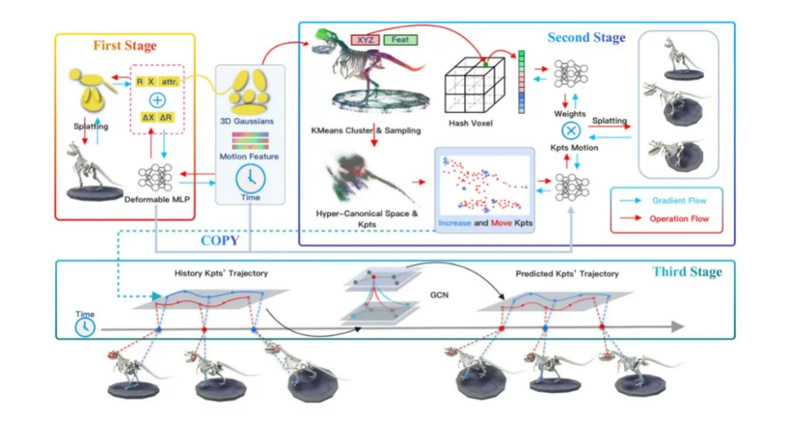

GaussianPrediction: Dynamic 3D Gaussian Prediction for Motion Extrapolation and Free View Synthesis

Authors: Boming Zhao, Yuan Li, Ziyu Sun, Lin Zeng, Yujun Shen, Rui Ma, Yinda Zhang, Hujun Bao, Zhaopeng Cui

GaussianPrediction reconstructs dynamic scenes and predicts future states from arbitrary viewpoints. This framework performs well on synthetic and real-world datasets, advancing intelligent decision-making in dynamic environments.

Portrait3D: Text-Guided High-Quality 3D Portrait Generation Using Pyramid Representation and GANs Prior

Authors: Yiqian Wu, Hao Xu, Xiangjun Tang, Xien Chen, Siyu Tang, Zhebin Zhang, Chen Li, Xiaogang Jin

Portrait3D generates high-quality 3D portraits from text using a pyramid-shaped neural representation. The method overcomes artifacts like oversaturation and Janus effects, achieving superior rendering performance.

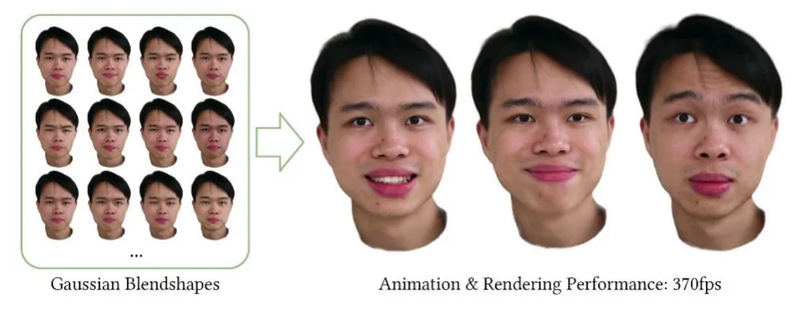

3D Gaussian Blendshapes for Head Avatar Animation

Authors: Shengjie Ma, Yanlin Weng, Tianjia Shao, Kun Zhou

This paper introduces a Gaussian blendshape representation for realistic human head animation. The approach captures high-frequency details while significantly improving rendering speed and quality.
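As background on the blendshapes named in the title, a classical linear blendshape model adds weighted per-expression offsets to a neutral shape. The sketch below shows that blending on a flat list of numbers; it is a generic illustration, not the paper's method. The function name `blend_attributes` and the toy data are hypothetical, and how the paper treats specific Gaussian attributes (rotations, for example) is not covered here.

```python
def blend_attributes(neutral, deltas, weights):
    """Return neutral + sum_k weights[k] * deltas[k], element by element.

    neutral: flat list of attribute values for the neutral expression
    deltas:  one offset list per expression, each the same length as neutral
    weights: one expression coefficient per offset list
    """
    if len(deltas) != len(weights):
        raise ValueError("need exactly one weight per blendshape")
    blended = list(neutral)
    for delta, weight in zip(deltas, weights):
        if len(delta) != len(neutral):
            raise ValueError("blendshape length must match the neutral shape")
        for i, offset in enumerate(delta):
            blended[i] += weight * offset
    return blended


# Toy data: three attribute values and two expression blendshapes.
neutral = [0.0, 1.0, 2.0]
deltas = [
    [1.0, 0.0, 0.0],
    [0.0, -1.0, 0.5],
]
weights = [0.5, 1.0]

result = blend_attributes(neutral, deltas, weights)
print(result)  # [0.5, 0.0, 2.5]
```

With all weights set to zero the function returns the neutral shape unchanged, which is the usual convention for delta-style blendshapes.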

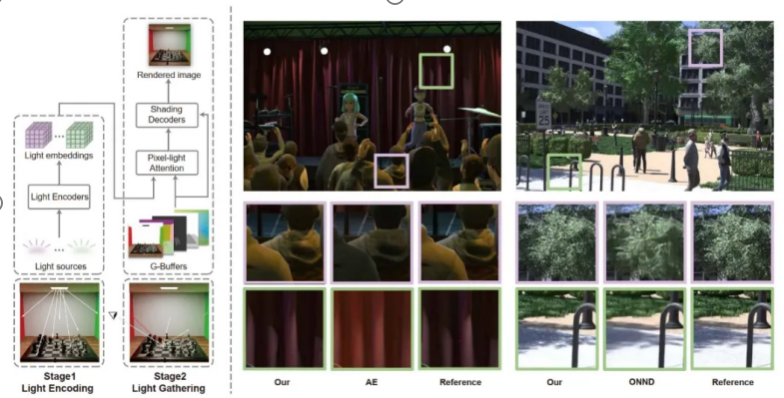

LightFormer: Light-Oriented Global Neural Rendering in Dynamic Scenes

Authors: Haocheng Ren, Yuchi Huo, Yifan Peng, Hongtao Sheng, Weidong Xue, Hongxiang Huang, Jingzhen Lan, Rui Wang, Hujun Bao

LightFormer focuses on neural representation of light sources in dynamic scenes, achieving real-time rendering with improved generalization and performance.

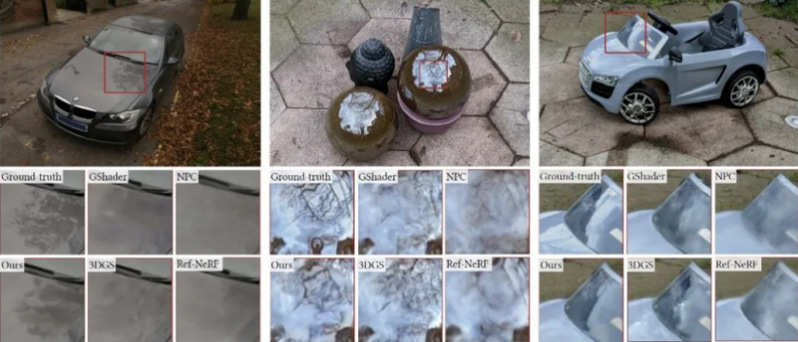

3D Gaussian Splatting with Deferred Reflection

Authors: Keyang Ye, Qiming Hou, Kun Zhou

This paper proposes a method for specular reflection rendering in dynamic 3D scenes. It uses deferred reflection to render high-quality specular reflections at frame rates comparable to existing 3D Gaussian splatting methods.

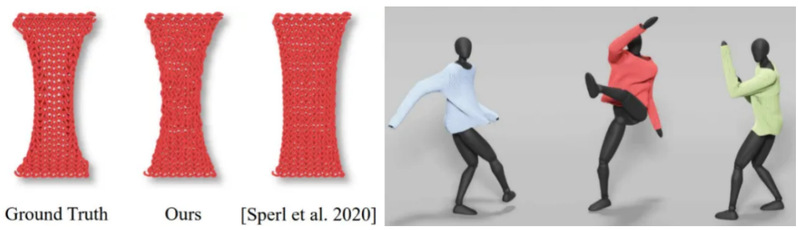

Neural-Assisted Homogenization of Yarn-Level Cloth

Authors: Xudong Feng, Huamin Wang, Yin Yang, Weiwei Xu

This study presents a neural network model for homogenizing yarn-level cloth simulations. The model accelerates physical simulation while maintaining stability, improving computational efficiency by two orders of magnitude.

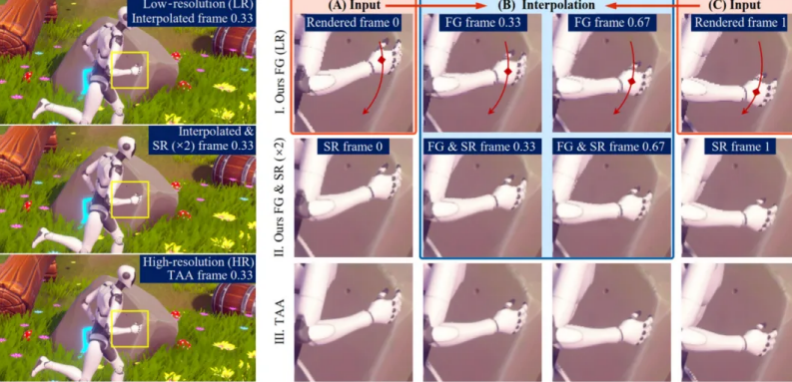

Mob-FGSR: Frame Generation and Super-Resolution for Mobile Real-Time Rendering

Authors: Sipeng Yang, Qingchuan Zhu, Junhao Zhuge, Qiang Qiu, Chen Li, Yuzhong Yan, Huihui Xu, Ling-Qi Yan, Xiaogang Jin

Mob-FGSR introduces a lightweight framework for frame generation and super-resolution on mobile devices. The framework enhances real-time rendering performance without requiring high-end GPUs.