One sentence is all it takes to 'clone' your voice? It’s actually possible!

With AI technology advancing at a rapid pace, a seemingly magical innovation, voice cloning, has quietly begun to reveal its dangerous side. The voice traces we leave behind on social platforms, voice assistants, and other digital spaces have become a "treasure trove" in the eyes of cybercriminals. Using voice cloning technology, they can easily replicate our voices and deploy them in identity theft, online fraud, and other illegal activities. From impersonating family members or friends who urgently need money, to posing as a boss demanding a funds transfer, fraudsters are increasingly bypassing our psychological defenses with cloned voices. Voice security urgently needs more attention!

Recently, a team from Zhejiang University's State Key Lab of Blockchain and Data Security conducted a comprehensive study. Using voice data from over 7,000 speakers and five major voice cloning tools, they tested eight voice authentication platforms, including those used in smart speakers, social apps, and even banking systems. They also organized listening tests with real users. The results were startling: voice cloning achieved an average attack success rate of over 80%, and human listeners told real voices from cloned ones with an accuracy below 50%, about the same as random guessing. The experiment demonstrated that attackers need just a single sample of your voice to generate a cloned voice that can easily deceive both human listeners and voice authentication systems.

This research has been accepted for presentation at the prestigious IEEE Symposium on Security and Privacy 2025 (IEEE S&P 2025), a leading international conference on information security.

01 Voice cloning technology is simple to use and incredibly cost-effective.

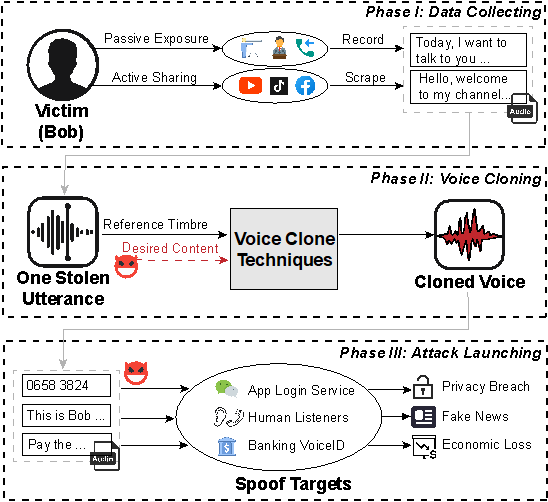

Attackers can easily record or download a clip of a target’s voice less than 10 seconds long and, with the help of a voice cloning tool, generate speech that sounds like the target saying things they never actually said. This method has successfully bypassed numerous real-world voice authentication systems, including those used in smart speakers, social apps, and even banking systems for transfers and withdrawals.

The Basic Process of Voice Cloning

What’s critical to note is that these cloning technologies are no longer confined to high-tech labs. They have become widely available and extremely easy to use. Today, a quick internet search for "voice cloning" yields a plethora of ready-to-use services and open-source frameworks, making it possible for almost anyone to use this technology.

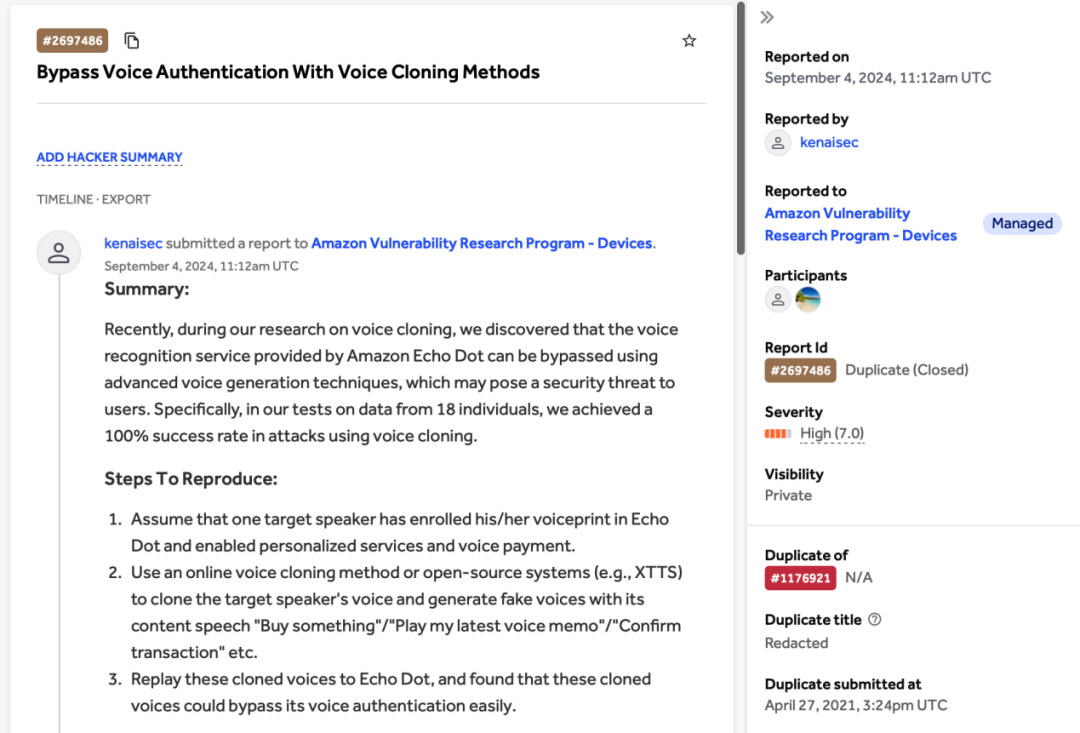

This study evaluated five major open-source and commercial voice cloning technologies, conducting large-scale assessments on eight different voice authentication systems. These systems included multiple smart speakers, commercial voiceprint service providers, social apps, and even a bank’s voiceprint-based transfer and withdrawal system. The research found that more than 80% of cloned voices were successful in bypassing these voice authentication systems, leading to privacy breaches and potential financial losses.

02 Cloned voices sound natural, posing a real threat to human perception.

The research found that deployed voice authentication systems struggle to detect cloned voices, and that human listeners fare no better: across more than 6,000 real-world listening tests, the human ear could not effectively distinguish between real and cloned voices. The accuracy rate was below 50%, nearly the same as random guessing.

Real-world cases further demonstrate the risk: attackers have used cloned voices to impersonate company executives, successfully directing employees to transfer millions of dollars. Fraudsters have also mimicked the voices of friends and family to carry out phone scams. In recent years, there have been several incidents worldwide where AI-generated voice fakes have led to election controversies, corporate crises, and even public panic. Even when these incidents are later identified as "synthetic fakes," the damage is often irreversible.

You might not realize it, but a short voice message in a social media post, a customer service call recording, or even a voiceover on a short video platform could all be used to "train" an AI that then impersonates your voice. The threat of voice cloning is already all around us!

03 What Can We Do in the Face of the Security Threat of Voice Cloning?

The research also tested cutting-edge passive voice forgery detection models and active watermarking protection methods. Passive detection models had an equal error rate (EER) of over 16%, while active watermarking, when the attacker added no forgery marker to the audio, had an EER exceeding 80%. These results indicate that current technologies generally fail to defend against high-quality voice forgery, leaving existing systems with significant security vulnerabilities to deepfake attacks. So, what can we do to protect our voices?
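For readers unfamiliar with the metric, the equal error rate is the error level at the decision threshold where a detector wrongly accepts fakes about as often as it wrongly rejects genuine audio. The sketch below shows one simple way to compute it. It is an illustration only, not the evaluation code used in the study; the function name `equal_error_rate` and the toy scores are assumptions made up for this example.

```python
def equal_error_rate(genuine_scores, spoof_scores):
    """Return (eer, threshold) at the point where the false-accept
    and false-reject rates are closest to each other.

    Assumes a higher score means "more likely genuine".
    """
    thresholds = sorted(set(genuine_scores) | set(spoof_scores))
    best = None
    for t in thresholds:
        # A sample is accepted as genuine when its score is >= t.
        far = sum(s >= t for s in spoof_scores) / len(spoof_scores)     # fakes accepted
        frr = sum(g < t for g in genuine_scores) / len(genuine_scores)  # genuine rejected
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    return best[1], best[2]


# Toy detector scores, invented for illustration only.
genuine = [0.9, 0.8, 0.7, 0.4]
spoof = [0.6, 0.3, 0.2, 0.1]

eer, threshold = equal_error_rate(genuine, spoof)
print(f"EER = {eer:.0%} at threshold {threshold}")  # EER = 25% at threshold 0.6
```

A lower EER means a better detector. At an EER of 16%, roughly one in six forged clips passes as genuine at the balanced threshold, and about the same share of genuine clips is flagged as fake.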

Firstly, raising awareness about protecting personal voice data is crucial. Avoid casually sharing personal voice content, especially clear, single-speaker audio. Be cautious with financial or smart services that rely on voice commands, and if you receive a "voice-based request" from a friend or family member, verify it through text or video.

Secondly, with the official release of the mandatory national standard "Cybersecurity Technology – Methods for Identifying AI-generated Synthetic Content", the government has introduced clear labeling requirements for synthetic content, including voice, providing a solid foundation for preventing misuse of synthetic content.

Additionally, more investment should be made in research on deepfake detection technologies. Fighting "magic with magic" will help the public better tell synthetic content from real content.

IEEE S&P 2025

The IEEE Symposium on Security and Privacy (IEEE S&P), founded in 1980, is one of the top four international conferences in the field of information security. With an acceptance rate consistently ranging from 12% to 15%, its accepted papers represent the latest advancements and cutting-edge trends in global network and system security research, drawing extensive attention from both academia and industry. In 2025, IEEE S&P received 1,740 submissions, ultimately accepting 257 papers, yielding an acceptance rate of just 14.8%.