Introduction

Imagine being able to write on a screen using nothing but your thoughts. Researchers from the State Key Laboratory of Brain-Computer Intelligence at Zhejiang University have developed a novel brain-computer interface (BCI) decoding framework that reconstructs clear, identifiable handwriting trajectories directly from neural signals in the motor cortex.

This breakthrough extends brain-controlled handwriting beyond alphabetic systems such as English to logographic systems such as Chinese, whose thousands of characters are each built from multiple strokes. The work offers a new communication pathway for people with severe motor impairments and marks an important step toward true “thought-to-text” interaction.

The 2025 International BCI Award recently announced its 12 finalists. Among them is the study “Decoding handwriting trajectories from intracortical brain signals for brain-to-text communication” by the team of Professor Wang Yueming and Dr. Hao Yaoyao at Zhejiang University, published in Advanced Science. Selected from nearly 90 leading projects across 40 countries, the nomination highlights the team’s scientific excellence and China’s growing presence in clinical invasive BCI research.

The International BCI Award, held annually, honors the most innovative and impactful brain-computer interface research worldwide. This nomination recognizes both the team’s research strength and a new milestone in China’s development of neural decoding technology for clinical use.

This study, conducted jointly by Zhejiang University’s invasive BCI research team and clinical neurosurgery collaborators, is the first to integrate the DILATE (DIstortion Loss with shApe and TimE) loss function into a clinical BCI decoding framework. The approach addresses a long-standing challenge: because patients cannot perform actual movements, their neural activity is misaligned in time with the intended movements used as decoding targets.

By implanting microelectrode arrays in the motor cortex of a participant with spinal cord injury, the team recorded high-resolution intracortical signals while the participant attempted to write Chinese characters. Using a Long Short-Term Memory (LSTM) network trained with the DILATE loss, the system reconstructed handwriting trajectories from single trials and, with data pooled across multiple recording sessions, recognized them with over 90% accuracy against a 1,000-character set.

1. Research Background and Core Problem

Traditional BCI systems primarily rely on classifying neural activity to recognize discrete characters, such as English letters. While this approach performs well for small character sets, it struggles with logographic writing systems like Chinese or Japanese, which contain thousands of distinct characters.

Moreover, in clinical BCI settings, patients cannot perform actual physical movements and can only engage in visually guided “attempted writing.” As a result, their neural responses are offset from the reference video cues by substantial, non-uniform time lags.

Conventional regression-based decoders, typically trained with a mean squared error (MSE) loss, compare predicted and true signals point by point and therefore assume strict temporal alignment between them. This rigidity leaves them unable to cope with temporal distortion, which degrades decoding accuracy and distorts the reconstructed trajectories. It is a major obstacle to natural handwriting communication through BCIs.
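To see why point-to-point alignment is a problem, the minimal Python sketch below compares MSE with classic dynamic time warping (DTW) on a toy 1-D signal and a copy that arrives one time step early. The sequences and function names are made up for illustration and are not data or code from the study.

```python
def mse(a, b):
    """Point-to-point mean squared error; requires equal-length sequences."""
    assert len(a) == len(b)
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def dtw(a, b):
    """Classic DTW distance using a squared-difference cost per aligned pair."""
    inf = float("inf")
    n, m = len(a), len(b)
    # D[i][j] = cheapest cost of aligning a[:i] with b[:j]
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = (a[i - 1] - b[j - 1]) ** 2
            # Extend the cheapest of: diagonal step, repeat a[i-1], or repeat b[j-1].
            D[i][j] = cost + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    return D[n][m]


# The same stroke profile; the "attempted" copy is shifted one step earlier.
intended = [0, 0, 1, 2, 1, 0, 0]
attempted = [0, 1, 2, 1, 0, 0, 0]

print(mse(intended, attempted))  # about 0.571: MSE penalizes the lag
print(dtw(intended, attempted))  # 0.0: DTW re-aligns the two curves
```

Even though the two curves have identical shape, MSE reports a sizable error, while DTW, which is free to stretch time, reports none.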

2. Research Methods and Key Findings

The study recruited a participant with a C4-level spinal cord injury and complete paralysis of both hands. Two Utah electrode arrays were implanted in the hand area of the participant’s left motor cortex. During the experiments, the participant watched videos showing each character being written and attempted to write 180 commonly used Chinese characters with the right hand, as if holding chalk, with each character repeated three times.

The team recorded multi-band neural signals and extracted several types of features, including local field potentials (LFPs), single-unit activity (SUA), multi-unit activity (MUA), and entire spiking activity (ESA).

The core innovation of this research is the introduction of the DILATE loss function, which consists of two components:

(1) Shape loss: based on differentiable soft Dynamic Time Warping (soft-DTW), it optimizes the overall shape similarity between the predicted and true sequences.

(2) Time loss: it constrains the alignment path to prevent excessive temporal distortion.
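To make the two components concrete, here is a minimal pure-Python sketch of a DILATE-style loss for 2-D pen trajectories. It follows the general recipe of the original DILATE formulation (Le Guen and Thome, 2019). The shape term is the soft-DTW value (Cuturi and Blondel, 2017). The time term weights the soft alignment matrix, which is the gradient of soft-DTW with respect to the pairwise costs, by a penalty Omega(i, j) = (i - j)^2 that grows away from the diagonal. The weight alpha, smoothing gamma, normalization, and all function names are illustrative assumptions, not the Zhejiang team's settings. A real decoder would compute this loss in an autodiff framework so that the LSTM can be trained through it; this sketch only evaluates it.

```python
import math

INF = float("inf")


def softmin(values, gamma):
    """Smoothed minimum -gamma * log(sum(exp(-v / gamma))), computed stably."""
    lo = min(values)
    if lo == INF:
        return INF
    return lo - gamma * math.log(sum(math.exp(-(v - lo) / gamma) for v in values))


def sq_dist(p, q):
    """Squared Euclidean distance between two points, e.g. (x, y) pen positions."""
    return sum((pi - qi) ** 2 for pi, qi in zip(p, q))


def soft_dtw_with_alignment(pred, target, gamma):
    """Return (soft-DTW value, soft alignment matrix E).

    E[i][j] is the derivative of soft-DTW with respect to the cost of pairing
    pred[i] with target[j], via the backward recursion of Cuturi and Blondel.
    """
    n, m = len(pred), len(target)
    # Pairwise costs (1-indexed), zero-padded on row n+1 and column m+1.
    delta = [[0.0] * (m + 2) for _ in range(n + 2)]
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            delta[i][j] = sq_dist(pred[i - 1], target[j - 1])

    # Forward pass: R[i][j] = delta[i][j] + softmin over the three predecessors.
    R = [[INF] * (m + 2) for _ in range(n + 2)]
    R[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            prev = (R[i - 1][j - 1], R[i - 1][j], R[i][j - 1])
            R[i][j] = delta[i][j] + softmin(prev, gamma)
    value = R[n][m]

    # Backward pass: propagate alignment weight back from the end cell (n, m).
    for i in range(n + 2):
        R[i][m + 1] = -INF
    for j in range(m + 2):
        R[n + 1][j] = -INF
    R[n + 1][m + 1] = value
    E = [[0.0] * (m + 2) for _ in range(n + 2)]
    E[n + 1][m + 1] = 1.0
    for j in range(m, 0, -1):
        for i in range(n, 0, -1):
            a = math.exp((R[i + 1][j] - R[i][j] - delta[i + 1][j]) / gamma)
            b = math.exp((R[i][j + 1] - R[i][j] - delta[i][j + 1]) / gamma)
            c = math.exp((R[i + 1][j + 1] - R[i][j] - delta[i + 1][j + 1]) / gamma)
            E[i][j] = E[i + 1][j] * a + E[i][j + 1] * b + E[i + 1][j + 1] * c
    return value, [row[1:m + 1] for row in E[1:n + 1]]


def dilate_loss(pred, target, alpha=0.5, gamma=0.1):
    """Return (total, shape, temporal) for a DILATE-style loss."""
    n, m = len(pred), len(target)
    shape, E = soft_dtw_with_alignment(pred, target, gamma)
    # Temporal term: alignment mass weighted by its squared distance from the
    # diagonal, Omega[i][j] = (i - j)^2, averaged over all n * m cells.
    temporal = sum(E[i][j] * (i - j) ** 2 for i in range(n) for j in range(m)) / (n * m)
    return alpha * shape + (1 - alpha) * temporal, shape, temporal


# Toy 2-D stroke and a copy that lags it by one sample (made-up data).
stroke = [(0.0, 0.0), (1.0, 0.0), (2.0, 1.0), (3.0, 1.0), (4.0, 0.0)]
lagged = [stroke[0]] + stroke[:-1]
_, shape_same, temporal_same = dilate_loss(stroke, stroke, gamma=0.01)
_, shape_lag, temporal_lag = dilate_loss(lagged, stroke, gamma=0.01)
print(temporal_same, temporal_lag)  # near 0 when aligned, clearly > 0 when lagged
```

Because the shape term alone forgives the one-sample lag almost completely, the time term is what tells the model that its output is late. The alpha weight trades off these two pressures.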

An LSTM network decoded writing velocity, and trajectories were reconstructed by integrating the decoded velocity over time. The reconstructed trajectories were then recognized using both general-purpose handwriting recognition software and a custom template-matching system based on Dynamic Time Warping (DTW).
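The velocity-to-trajectory step and a DTW template matcher can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the decoder output is taken to be a list of (vx, vy) velocities at a fixed time step dt, the two single-stroke templates are hypothetical, and the function names are invented here. It does not reproduce the team's recognition system.

```python
import math


def integrate_velocity(velocities, dt, start=(0.0, 0.0)):
    """Turn decoded (vx, vy) velocities into pen positions by cumulative summation."""
    x, y = start
    trajectory = [(x, y)]
    for vx, vy in velocities:
        x += vx * dt
        y += vy * dt
        trajectory.append((x, y))
    return trajectory


def dtw_distance(a, b):
    """DTW distance between two 2-D trajectories, Euclidean cost per point pair."""
    inf = float("inf")
    n, m = len(a), len(b)
    D = [[inf] * (m + 1) for _ in range(n + 1)]
    D[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = math.dist(a[i - 1], b[j - 1])
            D[i][j] = cost + min(D[i - 1][j - 1], D[i - 1][j], D[i][j - 1])
    return D[n][m]


def recognize(trajectory, templates):
    """Return the label of the template with the smallest DTW distance."""
    return min(templates, key=lambda label: dtw_distance(trajectory, templates[label]))


# Hypothetical template library: two single-stroke characters.
templates = {
    "一": [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0)],    # horizontal stroke
    "丨": [(0.0, 0.0), (0.0, -1.0), (0.0, -2.0), (0.0, -3.0)],  # vertical stroke, drawn downward
}

decoded_velocity = [(9.0, 0.5), (11.0, -0.4), (10.0, 0.2)]  # made-up decoder output
trajectory = integrate_velocity(decoded_velocity, dt=0.1)
print(recognize(trajectory, templates))  # 一
```

Integration lets small, noisy velocity errors average out into a recognizable shape. DTW then tolerates the remaining differences in writing speed between the decoded stroke and the template.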

Figure 1.

A. Experimental setup: a paralyzed participant with implanted electrode arrays imagines writing Chinese characters while following the on-screen animation.

B. Sequence of the Chinese character handwriting animation displayed on the screen.

C. Example of reconstructed Chinese character trajectories.

D. Example of reconstructed English letter trajectories.

Research Findings

(1) Significant improvement in single-day decoding performance with the DILATE framework:

Compared with the traditional MSE approach, DILATE reduced the DTW distance of the decoded trajectories from 5.73 to 5.35, indicating higher shape fidelity. Recognition accuracy with general-purpose handwriting recognition software increased from 27.2% to 37.2%. With a 180-character template library, DTW-based template matching reached 81.7% accuracy, and it still achieved 70.6% when the library was expanded to 1,000 characters.

(2) Enhanced decoding performance through multi-day data fusion:

After data from six recording sessions were combined, the DILATE decoder’s DTW distance fell further to 4.35, and recognition accuracy with general-purpose software rose to 52.2%. With the 1,000-character library, the recognition rate reached 91.1%, well above the 70.6% achieved with single-day decoding.

(3) Cross-language and cross-dataset validation:

On a public English handwriting dataset, DILATE achieved a single-trial trajectory recognition rate of 36.47%, compared with 22.93% for MSE. In clustering analyses, DILATE consistently outperformed MSE on all cluster-agreement metrics, including the Fowlkes–Mallows index (FMI), the adjusted Rand index (ARI), and adjusted mutual information (AMI).
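For readers unfamiliar with these metrics, the pure-Python sketch below computes ARI and FMI from pair counts. Each compares a clustering of decoded trajectories against the true character labels and equals 1 for perfect agreement. The toy labels are made up, and the way the study formed its clusters is not described here. AMI is omitted because its chance correction requires a longer expected-mutual-information calculation. scikit-learn provides all three as `adjusted_rand_score`, `adjusted_mutual_info_score`, and `fowlkes_mallows_score` in `sklearn.metrics`.

```python
from collections import Counter
from math import comb, sqrt


def pair_counts(labels_true, labels_pred):
    """Count sample pairs grouped together in both labelings, in each, and overall."""
    joint = Counter(zip(labels_true, labels_pred))
    both = sum(comb(c, 2) for c in joint.values())
    same_true = sum(comb(c, 2) for c in Counter(labels_true).values())
    same_pred = sum(comb(c, 2) for c in Counter(labels_pred).values())
    return both, same_true, same_pred, comb(len(labels_true), 2)


def adjusted_rand_index(labels_true, labels_pred):
    """Rand index corrected for chance: about 0 for random labels, 1 for a perfect match."""
    both, same_true, same_pred, total = pair_counts(labels_true, labels_pred)
    expected = same_true * same_pred / total
    max_index = (same_true + same_pred) / 2
    if max_index == expected:  # only when both labelings are the same trivial partition
        return 1.0
    return (both - expected) / (max_index - expected)


def fowlkes_mallows_index(labels_true, labels_pred):
    """Geometric mean of pairwise precision and recall."""
    both, same_true, same_pred, _ = pair_counts(labels_true, labels_pred)
    if same_true == 0 or same_pred == 0:
        return 0.0
    return both / sqrt(same_true * same_pred)


characters = ["a", "a", "b", "b"]  # true character of each decoded trial
clusters = [0, 0, 1, 2]            # cluster assigned to each decoded trajectory
print(adjusted_rand_index(characters, clusters))    # 0.5714... (= 4/7)
print(fowlkes_mallows_index(characters, clusters))  # 0.7071...
```

Both metrics ignore how clusters are numbered, so a clustering that groups the same trials together scores 1 even if its cluster IDs differ from the character labels.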

(4) Real-time performance and noise robustness:

Pseudo-online experiments showed that the system could decode at 250 Hz or faster, meeting real-time BCI requirements. DILATE was also highly robust to noise, making it well suited to the signal fluctuations of clinical environments.
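As a worked example of what the 250 Hz figure implies, a decoding rate translates directly into a time budget per decoder update. The symbols below are introduced here for illustration:

```latex
f_{\text{decode}} \ge 250\ \text{Hz}
\quad\Longrightarrow\quad
T_{\text{update}} = \frac{1}{f_{\text{decode}}} \le \frac{1}{250\ \text{s}^{-1}} = 4\ \text{ms}
```

Each decoder update would therefore need to finish within 4 ms. The section does not say exactly what the pseudo-online timing included, for example whether feature extraction was counted alongside the network step.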

3. Significance and Future Applications

This research demonstrates, for the first time, single-trial decoding of complex handwriting trajectories from intracortical signals and their successful translation into text. The DILATE framework addresses the long-standing “neural-signal-to-label mismatch” problem, offering new insights into the neural basis of motor imagery and advancing multi-session data fusion for BCI systems.

The results pave the way for natural, efficient, and personalized BCI handwriting systems for paralyzed individuals, supporting writing in multiple languages, including logographic languages such as Chinese. Future extensions may enable fine motor control for drawing, signing, or artistic creation, laying a foundation for the clinical translation of next-generation implantable BCIs.

4. Neuralink’s Breakthrough

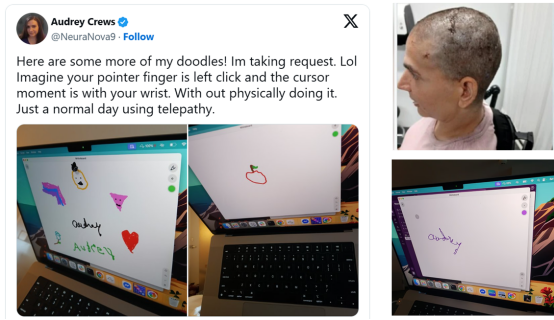

Notably, Neuralink, the brain-computer interface company founded by Elon Musk, recently reported that its first female patient, Audrey Crews, had successfully performed “mindwriting” using an implanted N1 chip. Audrey was able to write her name and draw shapes such as hearts, flowers, and rainbows purely through thought, as well as scroll pages and type on a keyboard.

“For the first time since I became paralyzed at 16, I can write again—even if it’s through brainwaves,” she shared. “I have so much to say. Maybe I’ll write a book.”

Figure 2. Audrey Crews writes her name using the implanted N1 chip.

Both Neuralink’s clinical progress and Zhejiang University’s research point to a shared vision: handwriting and drawing directly through neural decoding are rapidly becoming a reality. The next generation of BCI systems will not only restore communication but also rebuild the human capacity for expression and creativity.

This work was jointly carried out by the State Key Laboratory of Brain-Computer Intelligence at Zhejiang University, the Nanhu Institute for Interdisciplinary Brain-Computer Research, the College of Computer Science and Technology, the College of Biomedical Engineering and Instrument Science, and the Second Affiliated Hospital of Zhejiang University School of Medicine.

Dr. Hao Yaoyao from the State Key Laboratory of Brain-Computer Intelligence and Professor Wang Yueming from the College of Computer Science and Technology served as co-corresponding authors of the paper. PhD candidate Xu Guangxiang from the College of Biomedical Engineering and Instrument Science was the first author, with PhD candidate Wang Zebin also making significant contributions.

The study was jointly supervised by Dr. Zhang Jianmin and Dr. Zhu Junming from the Second Affiliated Hospital, together with Professor Xu Kedi from the College of Biomedical Engineering and Instrument Science.

This research was supported by the National Key R&D Program of China (Brain Science and Brain-like Research Initiative, 2030), the National Natural Science Foundation of China, and the Zhejiang Provincial Frontier Innovation Project.

Full paper: https://doi.org/10.1002/advs.202505492