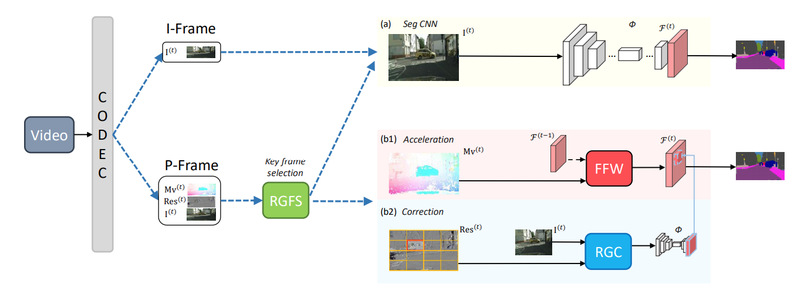

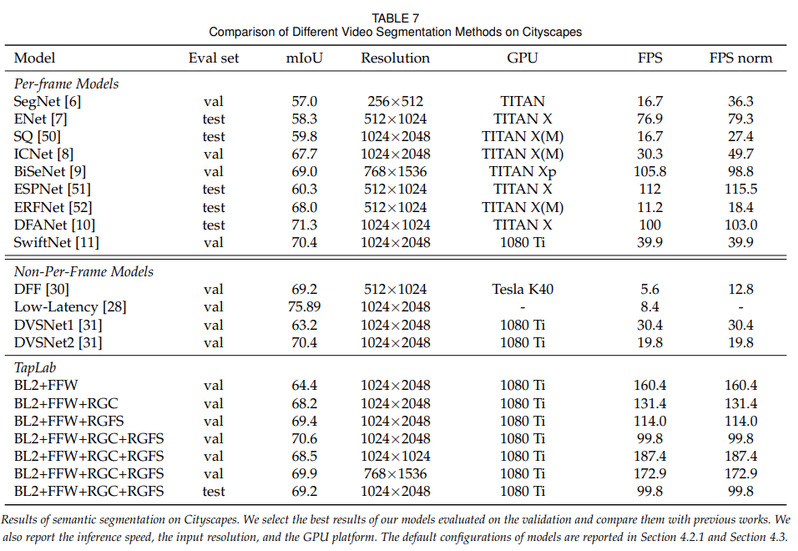

The research led by Prof. Xi Li focuses on real-time semantic video segmentation with neural networks in the era of 5G high-definition video (e.g., 1080p or 4K). The team proposes a pipeline that uses compressed-domain knowledge to make deep-learning segmentation efficient. The algorithm seamlessly embeds information that is already present in the encoded video (such as motion vectors and residuals) into the deep learning framework. It combines two operations: global cross-frame propagation of segmentation results, and local correction of segmentation in regions with large residuals. This removes the need for expensive optical flow computation. While maintaining comparable accuracy, the algorithm reaches over 160 FPS at its fastest on 1024 × 2048 video using a single GTX 1080 Ti GPU, which makes it highly valuable for practical applications.
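The propagate-then-correct idea described above can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the TapLab implementation. It assumes one `(dy, dx)` motion vector per 16 × 16 block, pointing from the current block to its reference location in the previous frame. It copies labels directly rather than propagating deep features, and it re-segments only the single fixed-size patch with the largest absolute residual. The names `warp_by_motion_vectors`, `select_residual_region`, `segment_frame`, and the `segment_patch` callback (a stand-in for the segmentation network) are hypothetical.

```python
import numpy as np


def warp_by_motion_vectors(prev_labels, mv, block=16):
    """Propagate the previous frame's label map using block motion vectors.

    prev_labels: (H, W) int array, segmentation of the previous frame.
    mv: (ceil(H/block), ceil(W/block), 2) int array of (dy, dx) per block.
        Assumed convention: current pixel (y, x) maps to (y + dy, x + dx)
        in the previous frame.
    """
    H, W = prev_labels.shape
    ys, xs = np.mgrid[0:H, 0:W]
    # Look up the motion vector of the block each pixel belongs to.
    dy = mv[ys // block, xs // block, 0]
    dx = mv[ys // block, xs // block, 1]
    # Clamp source coordinates so vectors pointing off-frame stay valid.
    src_y = np.clip(ys + dy, 0, H - 1)
    src_x = np.clip(xs + dx, 0, W - 1)
    # Fancy indexing returns a new array, so prev_labels is left untouched.
    return prev_labels[src_y, src_x]


def select_residual_region(residual, patch):
    """Return the top-left corner of the non-overlapping patch with the
    largest total absolute residual (where propagation is least reliable)."""
    energy = np.abs(residual)
    H, W = energy.shape
    best, best_pos = -1.0, (0, 0)
    for y in range(0, H - patch + 1, patch):
        for x in range(0, W - patch + 1, patch):
            e = energy[y:y + patch, x:x + patch].sum()
            if e > best:
                best, best_pos = e, (y, x)
    return best_pos


def segment_frame(prev_labels, mv, residual, segment_patch, block=16, patch=256):
    """Cheap global propagation plus local correction of one residual patch.

    segment_patch(y, x, size) is a hypothetical callback that runs the
    network on the given crop and returns a (size, size) label array.
    """
    H, W = prev_labels.shape
    patch = min(patch, H, W)  # keep the patch inside the frame
    labels = warp_by_motion_vectors(prev_labels, mv, block)
    y, x = select_residual_region(residual, patch)
    labels[y:y + patch, x:x + patch] = segment_patch(y, x, patch)
    return labels
```

Only the selected patch goes through the network, so the per-frame cost is roughly one patch-sized forward pass plus cheap indexing. A complete system would presumably still need to segment some frames in full, for example when propagation errors accumulate.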

After extensive engineering optimization for efficiency and practical deployment, the work has been successfully put into use. Open-source code: https://github.com/dcdcvgroup/TapLab

Full paper at: https://ieeexplore.ieee.org/document/9207876

This work was supported by CCST and the Shanghai Institute of Advanced Research.